Language Models are Few-Shot Learners (GPT-3)

Abstract

Recent work has demonstrated substantial gains on many NLP tasks and benchmarks by pre-training on a large corpus of text followed by fine-tuning on a specific task. While typically task-agnostic in architecture, this method still requires task-specific fine-tuning datasets of thousands or tens of thousands of examples. By contrast, humans can generally perform a new language task from only a few examples or from simple instructions – something which current NLP systems still largely struggle to do. Here we show that scaling up language models greatly improves task-agnostic, few-shot performance, sometimes even reaching competitiveness with prior state-of-the-art fine- tuning approaches. Specifically, we train GPT-3, an autoregressive language model with 175 billion parameters, 10x more than any previous non-sparse language model, and test its performance in the few-shot setting. For all tasks, GPT-3 is applied without any gradient updates or fine-tuning, with tasks and few-shot demonstrations specified purely via text interaction with the model. GPT-3 achieves strong performance on many NLP datasets, including translation, question-answering, and cloze tasks, as well as several tasks that require on-the-fly reasoning or domain adaptation, such as unscrambling words, using a novel word in a sentence, or performing 3-digit arithmetic. At the same time, we also identify some datasets where GPT-3's few-shot learning still struggles, as well as some datasets where GPT-3 faces methodological issues related to training on large web corpora. Finally, we find that GPT-3 can generate samples of news articles which human evaluators have difficulty distinguishing from articles written by humans. We discuss broader societal impacts of this finding and of GPT-3 in general.

What You'll Learn

This paper review explains how OpenAI created GPT-3, a language model that can perform new tasks without being specifically trained for them. Think of it as the difference between teaching someone a skill through extensive practice versus showing them just a few examples and having them figure it out. GPT-3 excels at the latter, which is a significant breakthrough in AI.

Overview

Generative Pre-trained Transformer 3 (GPT-3) is an autoregressive language model introduced in May 2020 by OpenAI. "Autoregressive" simply means the model predicts the next word in a sequence by looking at all the previous words - similar to how autocomplete on your phone suggests the next word based on what you've already typed.

The paper focuses primarily on demonstrating GPT-3's capabilities rather than diving deep into technical architecture details. To fully appreciate GPT-3, it helps to understand the foundational concepts of language models and transformers. If you have basic familiarity with neural networks, here's a learning path that builds up to GPT-3:

- http://karpathy.github.io/2015/05/21/rnn-effectiveness/ - Introduction to recurrent neural networks (RNNs) and how they generate text

- http://colah.github.io/posts/2015-08-Understanding-LSTMs/ - Understanding LSTMs, a type of RNN that remembers long-term patterns

- https://distill.pub/2016/augmented-rnns/#attentional-interfaces - How attention mechanisms help models focus on relevant information

- Attention is All You Need paper - The paper that introduced transformers, the architecture GPT-3 is built on

- http://jalammar.github.io/illustrated-gpt2/ - A visual guide to GPT-2, GPT-3's predecessor

If you're completely new to machine learning, I recommend starting with fast.ai's MOOC, which provides an excellent foundation. My highlights from the paper are below.

Highlights

The Evolution of Language Models

The field of Natural Language Processing (NLP) has evolved through several phases:

- Early days: Models learned simple word representations (word vectors) and used them in custom architectures built for each specific task

- Middle phase: Recurrent Neural Networks (RNNs) created richer, context-aware representations, but still required task-specific architectures

- Current approach: Pre-trained transformer models like GPT-3 can be fine-tuned directly for any task, eliminating the need for custom architectures for each new problem

This evolution represents a shift toward more general-purpose, flexible AI systems.

Understanding Few-Shot Learning

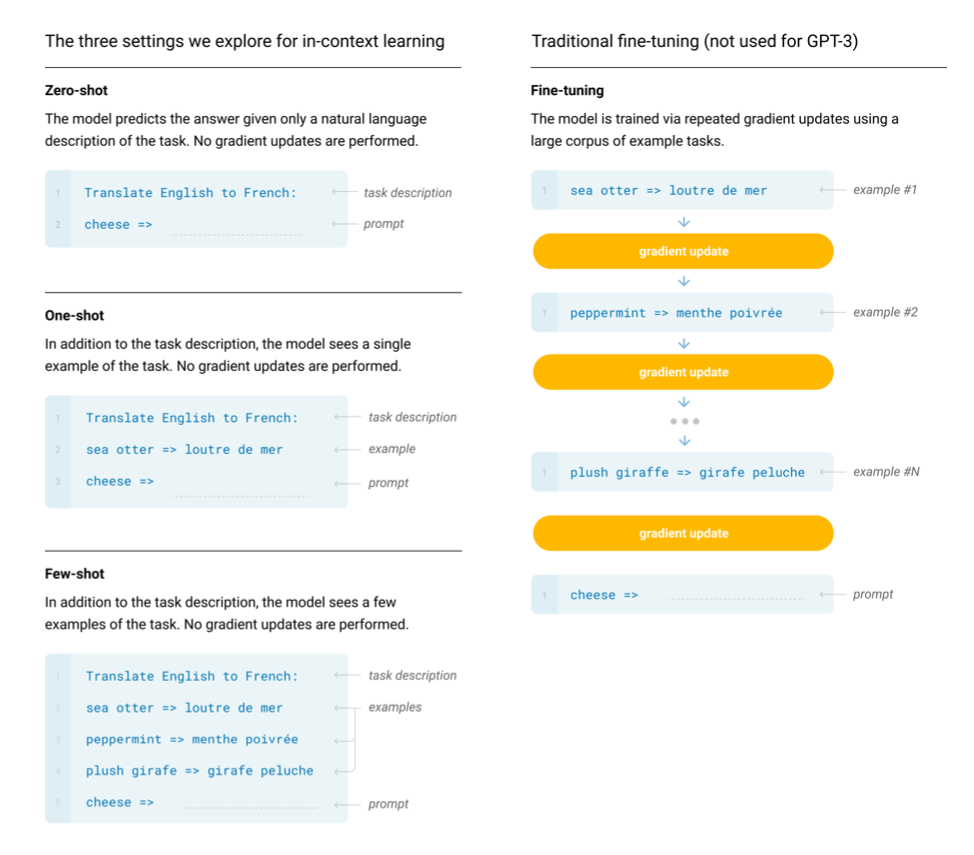

The paper demonstrates three key learning modes, illustrated in the diagram below:

- Zero-shot: Give the model a task description with no examples. It attempts the task purely from the instruction

- One-shot: Provide one example of what you want, and the model infers the pattern

- Few-shot: Show the model a handful of examples, and it learns to generalize from them

The key finding: While zero-shot performance improves steadily with model size, few-shot performance increases much more rapidly. This means larger models are significantly better at learning from context without any parameter updates or training.

Model Architecture and Scale

GPT-3 largely reuses the architecture from GPT-2 with one notable difference: it uses alternating dense and sparse attention patterns (inspired by the Sparse Transformer), which makes processing more efficient.

To understand how model size affects performance, the researchers trained 8 different versions ranging from 125 million to 175 billion parameters - that's a thousand-fold difference. GPT-3's 175 billion parameters make it 10 times larger than any previous dense language model. This massive scale is part of what gives GPT-3 its impressive capabilities.

Previous research suggested that with sufficient training data, larger models should perform better following a predictable mathematical relationship (a power law). This experiment confirmed that hypothesis across many different language tasks.

The Data Contamination Challenge

A significant concern when training on massive amounts of internet data is data contamination: the model might have seen the test questions during training, which would make performance metrics misleading.

This is particularly problematic because:

- GPT-3 was trained on datasets about 100 times larger than GPT-2

- The training data includes huge portions of the internet (Common Crawl)

- Large models can memorize vast amounts of content

However, the good news is that GPT-3 doesn't significantly overfit (memorize) its training data, as verified by testing on a separate validation dataset that was carefully deduplicated from the training set.

Human vs. Machine: Can You Tell the Difference?

As GPT-3 gets larger, an interesting phenomenon emerges: humans find it increasingly difficult to distinguish GPT-3-generated news articles from human-written ones. In fact, detection accuracy approaches random chance (50/50), even though people spend more time examining outputs from larger models.

This raises important questions about AI-generated content detection, which the authors identify as a crucial area for future research.

Broader Societal Impacts

The paper addresses three key concerns about GPT-3's impact:

1. Potential Misuse Applications The technology could be used for harmful purposes like generating disinformation or spam.

2. Bias and Fairness

- Internet-scale biases: Because GPT-3 learns from internet text, it reflects the stereotypes and biases present in that data

- Gender bias example: The model more often describes women using appearance-focused words like "beautiful" and "gorgeous," while using a broader range of descriptive adjectives for men

- The path forward: The authors call for developing shared frameworks that connect the ethical, technical, and empirical aspects of reducing bias in general-purpose AI models

3. External Incentive Structures Competitive pressures and economic incentives around AI development need to be considered when deploying powerful models.

Over the next few Saturdays, I'll be going through some of the foundational papers in Computer Science, and publishing my notes here. This is #21 in this series.